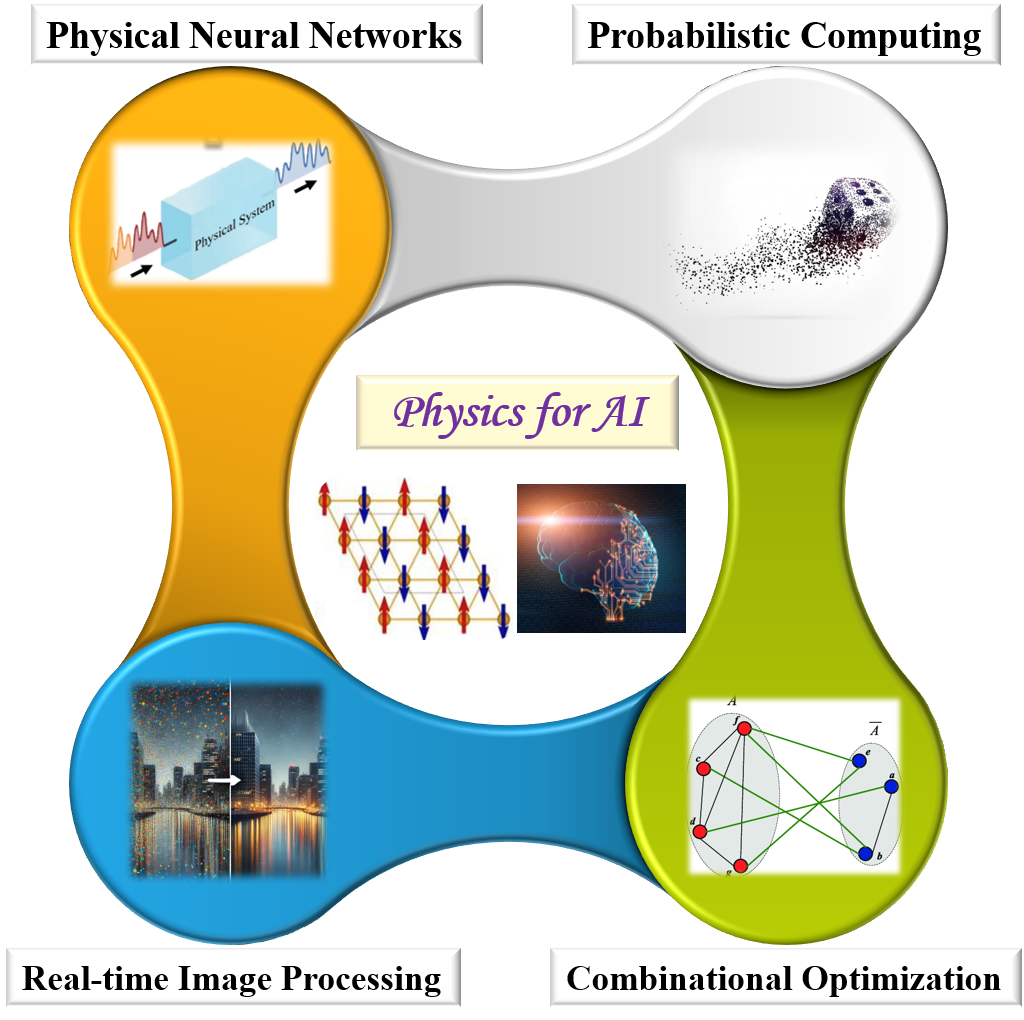

“Physics for AI” is an interdisciplinary research direction that has emerged rapidly worldwide in recent years. Its core idea is to harness the rich degrees of freedom and dynamical properties of physical systems to design and implement intelligent information-processing functions. By controlling the physical processes in systems such as quantum materials, nonlinear media, and phase-transition systems, it becomes possible to emulate and enhance the learning and reasoning mechanisms of neural networks directly in hardware. This approach drives the development of adaptive, energy-efficient intelligent algorithms and computing architectures, paving the way for a new paradigm of artificial intelligence computation beyond the conventional von Neumann architecture.

Our research focuses on the following two directions:

1. Physical Neural Networks (PNNs): We explore the use of the intrinsic dynamics of physical systems, such as CMOS resistive networks, magnetic materials, and phase-change materials, to achieve highly energy-efficient neural network computing. Our goal is to develop physical learning mechanisms that do not rely on conventional backpropagation, thereby enabling new brain-inspired intelligent architectures. In parallel, we study the theoretical modeling, algorithm design, and physical implementation of PNNs.

2. Ising Machines: To solve combinatorial optimization problems efficiently, our group studies how the Ising model can be applied to optimization and intelligent computing, designs advanced optimization algorithms, and develops hardware-accelerated implementations. We explore high-performance, reconfigurable Ising computing platforms based on spin systems, probabilistic bits (p-bits), and FPGAs. These architectures are designed to offer high scalability and energy efficiency both for solving optimization problems and for accelerating AI tasks.
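For the Physical Neural Networks direction, the idea of learning without backpropagation can be illustrated, in software only, by the classic Hopfield network: its weights are set by a local Hebbian rule, and recall is a relaxation toward a minimum of an energy function, much as a physical system settles into a low-energy state. The sketch below is a generic textbook model assumed purely for illustration. It is not the magnetic Hopfield network or the intrinsic gradient-descent adaptation reported by Niu et al. (PNAS, 2024), and the function names (`hebbian_weights`, `energy`, `recall`) are hypothetical.

```python
import random

def hebbian_weights(patterns):
    """Hebbian rule W_ij = (1/P) * sum_p x_i^p * x_j^p with zero diagonal.
    Each weight uses only the two neurons it connects: a local rule, no backpropagation."""
    n, p = len(patterns[0]), len(patterns)
    w = [[0.0] * n for _ in range(n)]
    for x in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += x[i] * x[j] / p
    return w

def energy(w, s):
    """Hopfield energy E = -1/2 * sum_ij W_ij s_i s_j."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(w, state, sweeps=10, seed=0):
    """Asynchronous updates s_i <- sign(sum_j W_ij s_j) in random order.
    With symmetric W and zero diagonal, no single update increases the energy."""
    rng = random.Random(seed)
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
    return s

# Store two orthogonal 16-neuron patterns, corrupt one, and let the network relax.
a = [1] * 8 + [-1] * 8
b = [1, -1] * 8
w = hebbian_weights([a, b])
noisy = list(a)
noisy[0], noisy[1] = -noisy[0], -noisy[1]  # flip two bits of pattern a
restored = recall(w, noisy)
print(restored == a, energy(w, noisy), energy(w, restored))  # True -28.0 -56.0
```

The corrupted input relaxes back to the stored pattern while the energy drops, which is the sense in which the "computation" is carried out by the system's own dynamics rather than by an external gradient calculation.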
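For the Ising Machines direction, the two basic ingredients, mapping an optimization problem onto an Ising energy and searching that energy landscape with stochastic p-bits, can be sketched in software. The example below is a minimal, assumed illustration: it encodes a MaxCut instance as antiferromagnetic couplings J_ij = -w_ij, then applies the commonly used p-bit update m_i = sgn(tanh(β I_i) + r), with r drawn uniformly from (-1, 1), under a linear annealing schedule for β. The graph, the schedule, and all function names (`maxcut_to_ising`, `pbit_anneal`, etc.) are hypothetical choices, not the group's hardware design or the specific algorithms in the references.

```python
import math
import random

def maxcut_to_ising(n, edges):
    """Encode MaxCut as couplings J_ij = -w_ij for each edge (i, j, w).
    With E(m) = -sum_{i<j} J_ij m_i m_j, the energy is lowest when connected spins disagree."""
    J = [[0.0] * n for _ in range(n)]
    for i, j, w in edges:
        J[i][j] -= w
        J[j][i] -= w
    return J

def ising_energy(J, m):
    n = len(m)
    return -sum(J[i][j] * m[i] * m[j] for i in range(n) for j in range(i + 1, n))

def cut_value(edges, m):
    """Total weight of edges whose endpoints lie on opposite sides of the cut."""
    return sum(w for i, j, w in edges if m[i] != m[j])

def pbit_anneal(J, betas, seed=0):
    """Sequential p-bit sweeps: m_i = sgn(tanh(beta * I_i) + r), r ~ U(-1, 1), I_i = sum_j J_ij m_j.
    P(m_i = +1) = (1 + tanh(beta * I_i)) / 2, i.e. Gibbs sampling at inverse temperature beta.
    Returns the lowest-energy configuration seen during the anneal."""
    rng = random.Random(seed)
    n = len(J)
    m = [rng.choice((-1, 1)) for _ in range(n)]
    best, best_e = list(m), ising_energy(J, m)
    for beta in betas:  # one full sweep per beta value
        for i in range(n):
            I = sum(J[i][j] * m[j] for j in range(n))
            m[i] = 1 if math.tanh(beta * I) + rng.uniform(-1.0, 1.0) >= 0 else -1
        e = ising_energy(J, m)
        if e < best_e:
            best, best_e = list(m), e
    return best

# Example: unit-weight 6-node ring; its maximum cut is 6 (alternate the two sides).
ring = [(k, (k + 1) % 6, 1.0) for k in range(6)]
J = maxcut_to_ising(6, ring)
betas = [0.1 + 2.9 * k / 499 for k in range(500)]  # linear annealing schedule
best = pbit_anneal(J, betas)
print(best, cut_value(ring, best))
```

Raising β gradually lets the p-bits explore freely at first and then settle into low-energy states. Physical p-bit hardware typically updates bits asynchronously, and unconnected bits can update in parallel; the sequential loop here is only a software stand-in for that behavior.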

Empowering intelligence with physics and advancing physics with intelligence: our group is dedicated to exploring new computing paradigms through the deep integration of physical systems and artificial intelligence, driving cross-disciplinary innovation in future intelligent computing architectures. We welcome students from diverse backgrounds, including physics, electrical engineering, computer science, and artificial intelligence, to join us in exploring the frontiers of intelligent computing.

References:

Wang et al., “Superior probabilistic computing using operationally stable probabilistic-bit constructed by manganite nanowire”, Natl. Sci. Rev. 12, nwae338 (2025).

Niu et al., “A self-learning magnetic Hopfield neural network with intrinsic gradient descent adaption”, Proc. Natl. Acad. Sci. U.S.A. 121, e2416294121 (2024).

Yu et al., “Physical neural networks with self-learning capabilities”, Sci. China-Phys. Mech. Astron. 67, 287501 (2024).

Hu et al., “Distinguishing artificial spin ice states using magnetoresistance effect for neuromorphic computing”, Nat. Commun. 14, 2562 (2023).

Niu et al., “Implementation of artificial neurons with tunable width via magnetic anisotropy”, Appl. Phys. Lett. 119, 204101 (2021).